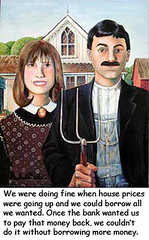

and this one...

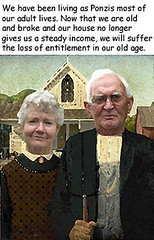

and this one...

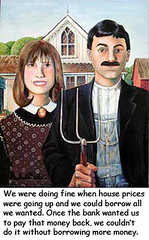

and this one...

When things like this [cycles] happen in nature - like the Earth going around the Sun, or a ball bouncing on a spring, or water undulating up and down - it comes from some sort of restorative force. With a restorative force, being up high is what makes you more likely to come back down, and being low is what makes you more likely to go back up. Just imagine a ball on a spring; when the spring is really stretched out, all the force is pulling the ball in the direction opposite to the stretch. This causes cycles.

I think this is interesting and deserves some further discussion. Take an ordinary pendulum. Give such a system a kick and it will swing for a time but eventually the motion will damp away. For a while, high now does portend low in the near future, and vice versa. But this pendulum won't start swinging this way on its own, nor will it persist in swinging over long periods of time unless repeatedly kicked by some external force.

It's natural to think of business cycles this way. We see a recession come on the heels of a boom - like the 2008 crash after the 2006-7 boom, or the 2001 crash after the late-90s boom - and we can easily conclude that booms cause busts.

So you might be surprised to learn that very, very few macroeconomists think this! And very, very few macroeconomic models actually have this property.

In modern macro models, business "cycles" are nothing like waves. A boom does not make a bust more likely, nor vice versa. Modern macro models assume that what looks like a "cycle" is actually something called a "trend-stationary stochastic process" (like an AR(1)). This is a system where random disturbances ("shocks") are temporary, because they decay over time. After a shock, the system reverts to the mean (i.e., to the "trend"). This is very different from harmonic motion - a boom need not be followed by a bust - but it can end up looking like waves when you graph it...
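To make the contrast concrete, here is a minimal sketch comparing the two kinds of dynamics. It assumes the simplest AR(1) form, x_t = rho * x_{t-1} + e_t, and a textbook damped spring; the function names and all parameter values (rho = 0.9, the spring constant, the damping) are my own illustrative choices, not taken from any particular macro model.

```python
import random


def ar1_impulse_response(rho=0.9, steps=60):
    """Path of x_t = rho * x_{t-1} after a single unit shock, with no further shocks."""
    path = [1.0]
    for _ in range(steps - 1):
        path.append(rho * path[-1])  # the shock simply decays toward the mean (zero)
    return path


def damped_oscillator_response(k=1.0, c=0.2, dt=0.1, steps=600):
    """Displacement of a damped spring, x'' = -k*x - c*x', released from x = 1."""
    x, v = 1.0, 0.0
    path = [x]
    for _ in range(steps - 1):
        v += (-k * x - c * v) * dt  # restoring force plus friction
        x += v * dt                 # semi-implicit Euler step
        path.append(x)
    return path


def ar1_simulation(rho=0.9, sigma=1.0, steps=200, seed=0):
    """AR(1) driven by a fresh Gaussian shock every period."""
    rng = random.Random(seed)
    x, path = 0.0, []
    for _ in range(steps):
        x = rho * x + rng.gauss(0.0, sigma)
        path.append(x)
    return path


if __name__ == "__main__":
    ar1 = ar1_impulse_response()
    spring = damped_oscillator_response()
    print("AR(1) ever below the mean after a positive shock:", min(ar1) < 0)
    print("Spring ever overshoots below zero:", min(spring) < 0)
```

After a positive shock the AR(1) path only decays back toward the mean and never swings below it, while the spring overshoots. Plot the output of `ar1_simulation`, though, and the repeated random shocks produce something that can easily look like irregular waves.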

Minsky conjectures that financial markets begin to build up bubbles as investors become increasingly overconfident about markets. They begin to take more aggressive positions, and can often start to increase their leverage as financial prices rise. Prices eventually reach levels which cannot be sustained either by correct, or any reasonable forecast of future income streams on assets. Markets reach a point of instability, and the overextended investors must now begin to sell, and are forced to quickly deleverage in a fire-sale-like situation. As prices fall market volatility increases, and investors further reduce risky positions. The story that Minsky tells seems compelling, but we have no agreed-on approach for how to model this, or whether all the pieces of the story will actually fit together. The model presented in this paper tries to bridge this gap.

The model is in crude terms like many I've described earlier on this blog. The agents are adaptive and try to learn the most profitable ways to behave. They are also heterogeneous in their behavior -- some rely more on perceived fundamentals to make their investment decisions, while others follow trends. The agents respond to what has recently happened in the market, and then the market reality emerges out of their collective behavior. That reality, in some of the runs LeBaron explores, shows natural, irregular cycles of bubbles and subsequent crashes of the sort Minsky envisioned. The figure below, for example, shows data for the stock price, weekly returns and trading volume as they fluctuate over a 10-year period of the model:

The large amount of wealth in the adaptive strategy relative to the fundamental is important. The fundamental traders will be a stabilizing force in a falling market. If there is not enough wealth in that strategy, then it will be unable to hold back sharp market declines. This is similar to a limits to arbitrage argument. In this market without borrowing the fundamental strategy will not have sufficient wealth to hold back a wave of self-reinforcing selling coming from the adaptive strategies.

Another important point, which LeBaron mentions in the paragraph above, is that there's no leverage in this model. People can't borrow to amplify investments they feel especially confident of. Leverage of course plays a central role in the instability mechanism described by Minsky, but it doesn't seem to be absolutely necessary to get this kind of instability. It can come solely from the interaction of different agents following distinct strategies.

The dynamics are dominated by somewhat irregular swings around fundamentals, that show up as long persistent changes in the price/dividend ratio. Prices tend to rise slowly, and then crash fast and dramatically with high volatility and high trading volume. During the slow steady price rise, agents using similar volatility forecast models begin to lower their assessment of market risk. This drives them to be more aggressive in the market, and sets up a crash. All of this is reminiscent of the Minsky market instability dynamic, and other more modern approaches to financial instability.

Instability in this market is driven by agents steadily moving to more extreme portfolio positions. Much, but not all, of this movement is driven by risk assessments made by the traders. Many of them continue to use models with relatively short horizons for judging market volatility. These beliefs appear to be evolutionarily stable in the market. When short term volatility falls they extend their positions into the risky asset, and this eventually destabilizes the market. Portfolio composition varying from all cash to all equity yields very different dynamics in terms of forced sales in a falling market. As one moves more into cash, a market fall generates natural rebalancing and stabilizing purchases of the risky asset in a falling market. This disappears as agents move more of their wealth into the risky asset. It would reverse if they began to leverage this position with borrowed money. Here, a market fall will generate the typical destabilizing fire sale behavior shown in many models, and part of the classic Minsky story. Leverage can be added to this market in the future, but for now it is important that leverage per se is not necessary for market instability, and it is part of a continuum of destabilizing dynamics.
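The continuum from stabilizing purchases to forced sales can be seen with a little arithmetic. The sketch below assumes a trader who simply holds a fixed fraction of wealth in the risky asset and rebalances back to it after a price fall. That constant-proportion rule, the function name and the numbers are my own simplifying assumptions for illustration, not the learning rule used by the agents in LeBaron's model.

```python
def rebalancing_trade(weight, wealth=100.0, price_drop=0.10):
    """Purchase (+) or sale (-) of the risky asset needed to restore the
    target weight after the risky asset's price falls by `price_drop`.

    weight < 1: part of wealth is in cash
    weight = 1: fully invested
    weight > 1: leveraged, i.e. the cash position is negative (borrowed)
    """
    risky_before = weight * wealth
    cash = wealth - risky_before                 # negative when leveraged
    risky_after = risky_before * (1.0 - price_drop)
    wealth_after = risky_after + cash
    target_risky = weight * wealth_after
    return target_risky - risky_after


if __name__ == "__main__":
    for w in (0.5, 1.0, 2.0):
        print(f"weight {w}: trade after a 10% fall = {rebalancing_trade(w):+.2f}")
```

With these numbers the half-cash trader buys about 2.5 into the falling market, the fully invested trader does nothing, and the trader leveraged 2-to-1 has to sell about 20. In general the trade works out to weight × wealth × drop × (1 − weight), which changes sign exactly at a weight of one.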

Q (Baum): So how did adaptive expectations morph into rational expectations?

I think this is exactly the issue: "fear of uncertainty". No science can be effective if it aims to banish uncertainty by theoretical fiat. And this is what really makes rational expectations economics stand out as crazy when compared to other areas of science and engineering. It's a short interview, well worth a quick read.

A (Phelps): The "scientists" from Chicago and MIT came along to say, we have a well-established theory of how prices and wages work. Before, we used a rule of thumb to explain or predict expectations: Such a rule is picked out of the air. They said, let's be scientific. In their mind, the scientific way is to suppose price and wage setters form their expectations with every bit as much understanding of markets as the expert economist seeking to model, or predict, their behavior. The rational expectations approach is to suppose that the people in the market form their expectations in the very same way that the economist studying their behavior forms her expectations: on the basis of her theoretical model.

Q: And what's the consequence of this putsch?

A: Craziness for one thing. You’re not supposed to ask what to do if one economist has one model of the market and another economist a different model. The people in the market cannot follow both economists at the same time. One, if not both, of the economists must be wrong. Another thing: It’s an important feature of capitalist economies that they permit speculation by people who have idiosyncratic views and an important feature of a modern capitalist economy that innovators conceive their new products and methods with little knowledge of whether the new things will be adopted -- thus innovations. Speculators and innovators have to roll their own expectations. They can’t ring up the local professor to learn how. The professors should be ringing up the speculators and aspiring innovators. In short, expectations are causal variables in the sense that they are the drivers. They are not effects to be explained in terms of some trumped-up causes.

Q: So rather than live with variability, write a formula in stone!

A: What led to rational expectations was a fear of the uncertainty and, worse, the lack of understanding of how modern economies work. The rational expectationists wanted to bottle all that up and replace it with deterministic models of prices, wages, even share prices, so that the math looked like the math in rocket science. The rocket’s course can be modeled while a living modern economy’s course cannot be modeled to such an extreme. It yields up a formula for expectations that looks scientific because it has all our incomplete and not altogether correct understanding of how economies work inside of it, but it cannot have the incorrect and incomplete understanding of economies that the speculators and would-be innovators have.

Since the beginning of banking the possibility of a lender to assess the riskiness of a potential borrower has been essential. In a rational world, the result of this assessment determines the terms of a lender-borrower relationship (risk-premium), including the possibility that no deal would be established in case the borrower appears to be too risky. When a potential borrower is a node in a lending-borrowing network, the node’s riskiness (or creditworthiness) not only depends on its financial conditions, but also on those who have lending-borrowing relations with that node. The riskiness of these neighboring nodes depends on the conditions of their neighbors, and so on. In this way the concept of risk loses its local character between a borrower and a lender, and becomes systemic.

In this connection, recall Alan Greenspan's famous admission that he had trusted in the ability of rational bankers to keep markets working by controlling their counterparty risk. As he exclaimed in 2008,

The assessment of the riskiness of a node turns into an assessment of the entire financial network [1]. Such an exercise can only be carried out with information on the asset-liability network. This information is, up to now, not available to individual nodes in that network. In this sense, financial networks – the interbank market in particular – are opaque. This intransparency makes it impossible for individual banks to make rational decisions on lending terms in a financial network, which leads to a fundamental principle: Opacity in financial networks rules out the possibility of rational risk assessment, and consequently, transparency, i.e. access to system-wide information is a necessary condition for any systemic risk management.

"Those of us who have looked to the self-interest of lending institutions to protect shareholder's equity -- myself especially -- are in a state of shocked disbelief."

The trouble, at least partially, is that no matter how self-interested those lending institutions were, they couldn't possibly have made the staggeringly complex calculations required to assess those risks accurately. The system is too complex. They lacked necessary information. Hence, as Thurner and Poledna point out, we might help things by making this information more transparent.

In most developed countries interbank loans are recorded in the ‘central credit register’ of Central Banks, that reflects the asset-liability network of a country [5]. The capital structure of banks is available through standard reporting to Central Banks. Payment systems record financial flows with a time resolution of one second, see e.g. [6]. Several studies have been carried out on historical data of asset-liability networks [7–12], including overnight markets [13], and financial flows [14].

I wrote a little about this DebtRank idea here. It's a computational algorithm applied to a financial network which offers a means to assess systemic risks in a coherent, self-consistent way; it brings network effects into view. The technical details aren't so important, but the original paper proposing the notion is here. The important thing is that the DebtRank algorithm, along with the data provided to central banks, makes it possible in principle to calculate a good estimate of the overall systemic risk presented by any bank in the network.

Given this data, it is possible (for Central Banks) to compute network metrics of the asset-liability matrix in real-time, which in combination with the capital structure of banks, makes it possible to define a systemic risk-rating of banks. A systemically risky bank in the following is a bank that – should it default – will have a substantial impact (losses due to failed credits) on other nodes in the network. The idea of network metrics is to systematically capture the fact that by borrowing from a systemically risky bank, the borrower also becomes systemically more risky since its default might tip the lender into default. These metrics are inspired by PageRank, where a webpage that is linked to a famous page gets a share of the ‘fame’. A metric similar to PageRank, the so-called DebtRank, has been recently used to capture systemic risk levels in financial networks [15].
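To give a feel for how such a network metric works, here is a heavily simplified, DebtRank-style sketch. It is not the published algorithm in full detail: the function name, the toy three-bank network and the choice to weight banks by their share of total lending are my own assumptions. The basic idea it tries to capture is that a bank in distress passes losses on to its lenders in proportion to their exposure relative to their equity, and each bank propagates distress only once.

```python
def debtrank_like(exposures, equity, shocked, psi=1.0):
    """Simplified DebtRank-style score for one initially shocked bank.

    exposures[i][j]: amount bank i has lent to bank j (illustrative data)
    equity[i]:       capital of bank i
    shocked:         index of the bank hit by the initial shock
    psi:             initial distress level of the shocked bank, in [0, 1]

    Returns the extra distress induced in the network, weighted by each
    bank's share of total lending (an assumed proxy for economic value).
    """
    n = len(equity)
    total_lending = sum(sum(row) for row in exposures)
    value = [sum(exposures[i]) / total_lending for i in range(n)]
    # impact[j][i]: fraction of lender i's equity wiped out if borrower j defaults
    impact = [[min(1.0, exposures[i][j] / equity[i]) for i in range(n)]
              for j in range(n)]

    distress = [0.0] * n
    state = ["undistressed"] * n
    distress[shocked] = psi
    state[shocked] = "distressed"
    initial = list(distress)

    while "distressed" in state:
        active = [j for j in range(n) if state[j] == "distressed"]
        updated = list(distress)
        for i in range(n):
            hit = sum(impact[j][i] * distress[j] for j in active)
            updated[i] = min(1.0, distress[i] + hit)
        for i in range(n):
            if state[i] == "distressed":
                state[i] = "inactive"      # each bank propagates only once
            elif state[i] == "undistressed" and updated[i] > 0:
                state[i] = "distressed"
        distress = updated

    return sum((distress[i] - initial[i]) * value[i] for i in range(n))


if __name__ == "__main__":
    # Toy chain: bank 0 lends 10 to bank 1, which lends 10 to bank 2.
    exposures = [[0, 10, 0],
                 [0, 0, 10],
                 [0, 0, 0]]
    equity = [20, 20, 20]
    for bank in range(3):
        print(bank, debtrank_like(exposures, equity, shocked=bank))
```

In this toy chain the bank at the end of the borrowing chain (bank 2) gets the highest score, because its default hurts bank 1 directly and bank 0 indirectly, while bank 0, which nobody has lent to, scores zero.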

The idea is to reduce systemic risk in the IB network by not allowing borrowers to borrow from risky nodes. In this way systemically risky nodes are punished, and an incentive for nodes is established to be low in systemic riskiness. Note, that lending to a systemically dangerous node does not increase the systemic riskiness of the lender. We implement this scheme by making the DebtRank of all banks visible to those banks that want to borrow. The borrower sees the DebtRank of all its potential lenders, and is required (that is the regulation part) to ask the lenders for IB loans in the order of their inverse DebtRank. In other words, it has to ask the least risky bank first, then the second risky one, etc. In this way the most risky banks are refrained from (profitable) lending opportunities, until they reduce their liabilities over time, which makes them less risky. Only then will they find lending possibilities again. This mechanism has the effect of distributing risk homogeneously through the network.

The overall effect in the interbank market would be -- in an idealized model, at least -- to make systemic banking collapses much less likely. Thurner and Poledna ran a number of agent-based simulations to test out the dynamics of such a market, with encouraging results. The model involves banks, firms and households and their interactions; details in the paper for those interested. Bottom line, as illustrated in the figure below, is that cascading defaults through the banking system become much less likely. Here the red shows the statistical likelihood over many runs of banking cascades of varying size (number of banks involved) when borrowing banks choose their counterparties at random; this is the "business as usual" situation, akin to the market today. In contrast, the green and blue show the same distribution if borrowers instead sought counterparties so as to avoid those with high values of DebtRank (green and blue for slightly different conditions).
Clearly, system-wide problems become much less likely.
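The borrowing rule in the quoted passage (ask the least risky lender first, then the next, and so on) is simple enough to sketch in a few lines. The function name, the tuple layout and the numbers below are hypothetical, and the sketch ignores everything else in Thurner and Poledna's model (firms, households, interest rates); it only shows the ordering step.

```python
def request_loans(amount_needed, lenders):
    """Fill a borrowing need by asking lenders in order of increasing DebtRank.

    lenders: list of (name, debtrank, funds_available) tuples (illustrative).
    Returns (loans, unmet), where loans is a list of (name, amount) pairs
    and unmet is whatever part of the need could not be covered.
    """
    loans = []
    remaining = amount_needed
    for name, _debtrank, available in sorted(lenders, key=lambda l: l[1]):
        if remaining <= 0:
            break
        granted = min(remaining, available)
        if granted > 0:
            loans.append((name, granted))
            remaining -= granted
    return loans, remaining


if __name__ == "__main__":
    lenders = [("A", 0.4, 50), ("B", 0.1, 30), ("C", 0.2, 0)]
    print(request_loans(60, lenders))
```

Here the riskiest lender, A, is approached last and only gets to lend what the safer banks could not supply, which is the sense in which risky banks lose lending opportunities until their DebtRank comes down.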